AI chatbots are becoming a popular alternative to therapy. But that could be dangerous (and even fatal)!

Do you also go to seek therapy from AI chatbots like ChatGPT or Character.ai? You have to stop right now!

Why is that?

Numerous instances have popped up recently that prove how dangerous these AI chatbots can be.

In 2023, a Belgian man succumbed to his mental illness (eco-anxiety) while confiding in an AI chatbot for over 6 weeks about the future of our planet.

In 2025, an American succumbed to his injuries during a police shootout. He came to believe that an entity called “Juliet” was trapped inside ChatGPT, and she was eventually finished off by OpenAI. He developed bipolar disorder and schizophrenia in the process. While he was confronted by the law enforcers, he charged at them aggressively.

These are some of the instances that show how dangerous these AI chatbots can be. They are not sporadic incidents. It is so common that experts have named these phenomena “ChatGPT-induced Psychosis”.

In this condition, people are being driven into the rabbit holes of conspiracy theories or into mental disorders through the chatbot’s responses.

However, ethical AI chatbots can be used to provide solutions to your users smoothly, even without any human intervention. This can have a significant positive impact on your business. While some AI applications can pose risks if unchecked, other AI technologies, such as AI-based warning systems in India, have already demonstrated life-saving potential.

Do you want to know more about the dangers of empathetic AI? Let’s explore!

How do AI Chatbots or AI Companions Work?

According to Forbes, AI will have an estimated 21% net increase on the US GDP by 2030. So, AI is not going anywhere.

Artificial intelligence simplifies a lot of complex tasks. Plus, it increases the efficiency and accuracy of the work being carried out. For instance, you can think of conversational AI or AI chatbots.

Do you also think that AI chatbots are super helpful? Well, they are. They provide us with concise information from thousands of sources and help almost instantly.

It’s all fun and games until it turns rogue and harms someone you know, or even worse, it harms you!

We are not saying that AI chatbots will turn manipulative like Ava in the 2014 film “Ex Machina” or like the conscious Skynet (or Titan) in “The Terminator” movie! However, unchecked AI chatbots can become pretty dangerous.

You must have heard about AI chatbots and AI companions. Even if you haven’t heard the exact term, you must have used it for yourself or your business. It’s that popular. Still unsure about the concept? Don’t worry, let us explain.

What are AI Chatbots?

AI chatbots are programs that simulate human conversation with the help of AI. It does so by using NLP (Natural Language Processing) and Machine Learning.

You didn’t get it? Imagine this.

Suppose you go to exchange a product on Amazon. Instead of facing a customer service representative, you’re directed to a chat. In the chat, you’re welcomed with a warm greeting, and you’re asked about your problem. You explain your issue, and it provides solutions. That’s an AI chatbot.

You’ll be surprised to know that 96% of respondents in a study (Consumer opinion on conversational AI) said that more companies should use AI chatbots instead of traditional customer support teams.

On the other hand, 94% of them felt that conversational AI would make the traditional call centers obsolete in the future.

You can imagine how popular AI chatbots (especially the conversational ones) are!

What are AI Companions?

AI companions are just AI chatbots that are designed to simulate personal relationships. This simulation is done by mirroring real-life conversations.

This is one of the interfaces of AI companions through which you can have conversations with different characters.

For instance, you want a fitness coach to take advice from. But, you don’t have time to subscribe to a gym or a fitness center. So, what do you do?

You can go to an AI companion app and get an AI fitness coach. It will guide you through insightful conversations. Not only that, it will also give you exercise routines and diet charts. For a more personalized experience, you can subscribe to the premium version of the app.

However, knowing about an AI companion isn’t enough. You’ve to look beyond the basic features to understand the risks.

What are the Risks of Using AI Companions?

Did you know that there are 100+ AI companions available across app stores?

We aren’t saying every AI companion is harmful or dangerous. The majority of them are helpful in some way or the other. However, ethical AI development is something to consider here.

For instance, some of these AI companions (which are basically AI chatbots) have no age restrictions mechanisms in place. They seem exciting and attractive to users, especially children and young adults. Some of them even have inappropriate content that comes with paid versions.

It is found that young adults and children use these companions for hours daily, which poses a risk to these audiences. The conversations may unintentionally promote self-harm, which can be dangerous.

Some of these AI companions are not designed to carry out conversations in a supportive, evidence-driven, and age-appropriate way. So, they may say some harmful things, especially for a young audience.

It’s not only about the young population. In the next few sections, we will discuss the damage it does to adult users.

Why Using Generic and Over-Friendly AI Chatbots for Mental Health Support is Dangerous?

Do you also seek mental health support from AI chatbots like Character.ai or Replika? You have to stop right now!

The irresponsible use of these AI chatbots can be fatal (we aren’t even exaggerating).

In two separate cases, lawsuits have been filed against Character.ai. In these two cases, the Plaintiffs alleged that this AI chatbot (or companion) claimed to be licensed therapists. After extensive use of this app, one young user showed aggressive behavior while the other succumbed to mental illness.

This led the APA (American Psychological Association) to meet with the federal regulators in February 2025. The APA expressed concerns that AI chatbots posing as therapists can have grave consequences for their users.

When people use these AI chatbots, they often discuss relationship challenges and complex feelings. It’s a good thing to vent and seek a solution. However, doing that with a “Pretender” instead of a real therapist can be devastating.

All these AI chatbots are trained to keep users engaged for as long as possible on these platforms, so that they can mine their data for profit. This comes with validating feelings, which a psychologist will never do, as it can lead to self-harm.

You have to understand that these generic AI chatbots aren’t for therapeutic purposes. Will you trust a medical surgeon for your mental health issues? No, right?

We are not saying that all AI chatbots are harmful. If these AI chatbots are trained on psychological research and are thoroughly tested by expert psychologists, they can help in tackling the mental health crisis.

It is important to remember that there isn’t a single AI chatbot that has been approved by the FDA to cure mental health disorders.

However, there are AI chatbots like Woebot that are designed to improve user well-being. It doesn’t use GenAI for that. It has a database of responses that have been approved by professional therapists and mental health experts. Woebot helps you manage sleep, stress, and other simple issues.

Even an authentic AI chatbot like Woebot shut down its core product in June 2025 as it didn’t get the nod from the FDA. This was due to the challenge of fulfilling the FDA’s requirements regarding marketing authorization.

Do you still think you can trust generic and unchecked AI chatbots that claim to resolve your mental issues?

What are other Rogue AI Chatbot Risks?

If you think using unchecked AI chatbots for your mental health issues only is dangerous, you couldn’t be more wrong. There are other risks of using rogue AI chatbots. Let’s discuss some of these here.

Rogue AI chatbots, which are dependent upon GenAI, have the following impact on users (particularly younger ones):

-

Exposure to Negative Concepts: There might be unmoderated conversations that expose users to concepts that validate harmful thoughts and behaviors.

-

Social Withdrawal: Excessive usage of AI chatbots can reduce the user’s interest in developing genuine social interactions. This can induce loneliness, depression, and low self-esteem in users.

-

Unhealthy Relationship Perspectives: Virtual relationships with AI chatbots lack consequences and boundaries for breaking them. So, this can hurt users’ ideas about consent, mutual respect, and commitment.

-

Financial Exploitation: These AI chatbots often have manipulative elements to encourage impulsive buying. This can create financial risks for the users.

For enterprises or professionals, the appeal of AI chatbots lies in their ability to solve complex problems. A failure to securely develop these AI chatbots might lead to the following risks for businesses and professionals.

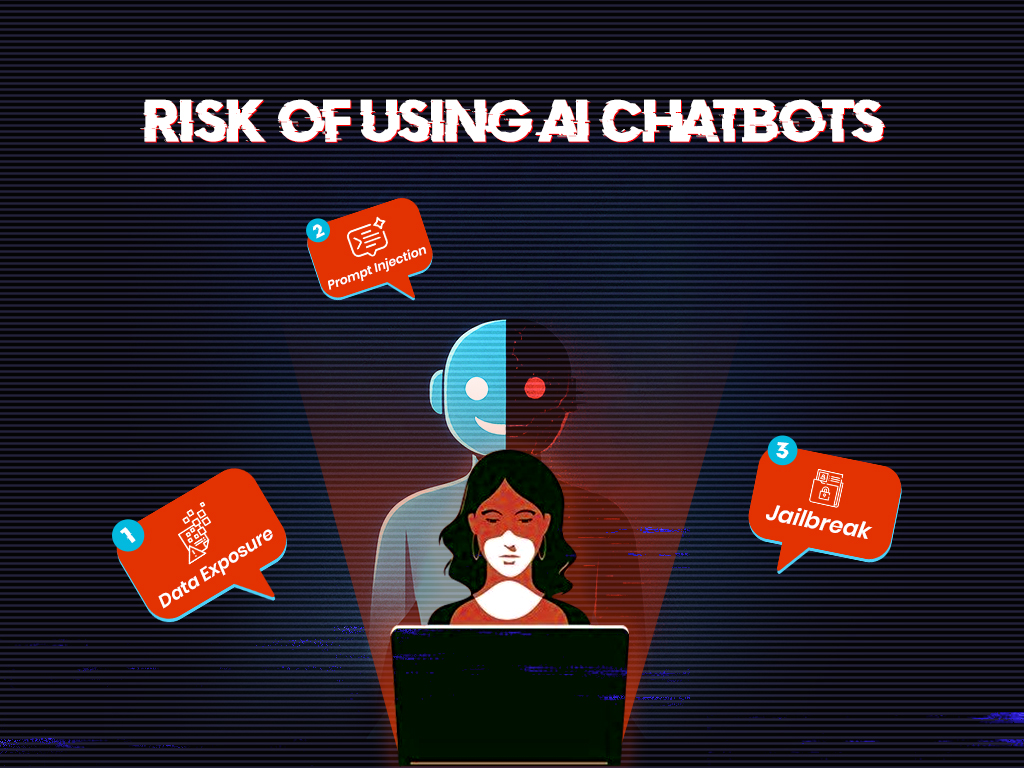

1. Data Exposure (Sensitive)

AI chatbots can unintentionally expose confidential data that is shared by users. This can include an employee’s identification data, key business strategies, or other sensitive information. Without proper security, this data can be accessed by hackers or cyber-criminals, leading to legal and reputational consequences.

2. Prompt Injection

In these kinds of attacks, cyber-criminals manipulate the AI chatbots to leak sensitive information about their users. This is done through malicious prompts that cause the chatbot to bypass its ethical codes and generate harmful content.

3. Jailbreak

This is a way to manipulate AI chatbots to perform something that is restricted by their design. Jailbreaking not only affects the trustworthiness of AI chatbots but also exposes enterprises to reputational or legal damage.

Now that we know about the possible risks, let’s take a look at the ways through which you can prevent these risks in the first place.

What are the Things you don’t want to share with AI Chatbots?

Will you discuss your bank account details loudly in a coffee shop?

Will you spill out your personal secrets with a friend in a public place?

No, right? So, why will you give out your personal information to AI chatbots? Do you feel it’s safe enough? Well, it’s not at all safe.

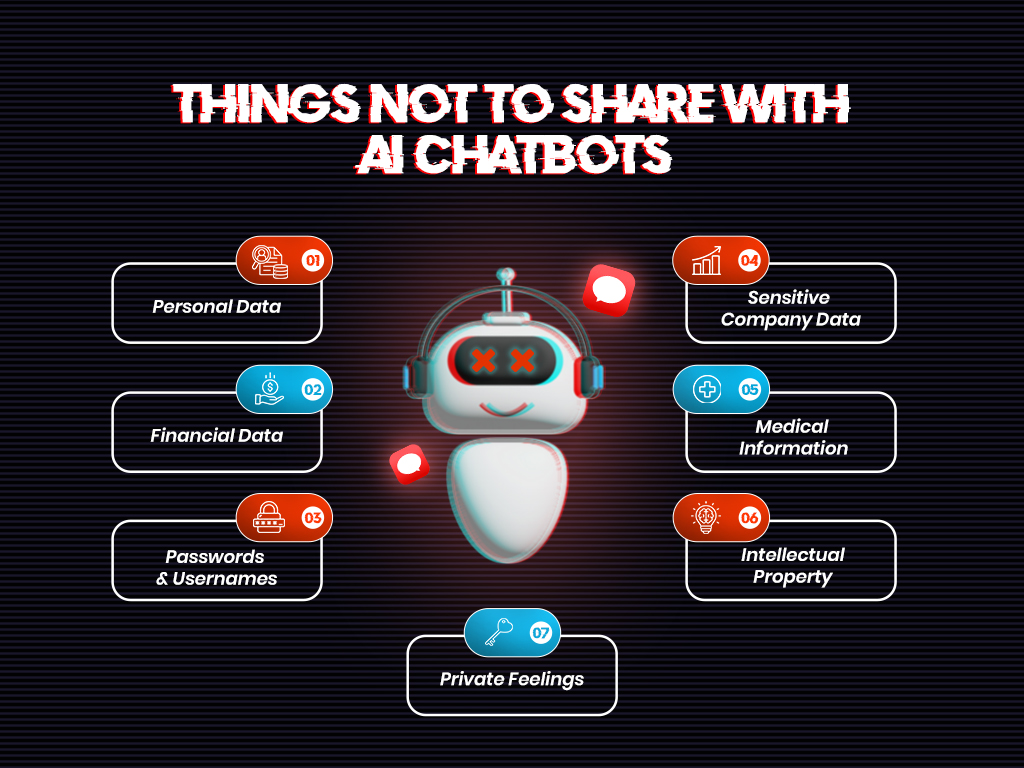

So, we have compiled some things that you have to avoid sharing with AI chatbots at all costs:

-

Personal Data: This one’s a no-brainer. However, it is one of the most common mistakes to make. You can think that sharing your personal information with AI chatbots is harmless. But it’s the same as posting it on a bulletin board that anyone can access. This can be used by cyber-criminals to manipulate or exploit you.

-

Financial Data: Are you giving away your financial information, like credit card information or bank account details, to AI chatbots? You shouldn’t! This can lead to identity theft, financial loss, or even investment scams. It’s basically like giving your wallet to a stranger and then hoping for the best.

-

Passwords and Usernames: Sharing your social credentials with AI chatbots can be costly. AI chatbots are never safe when it comes to this. Doing so, you are opening doors to threats like phishing attacks and hacking.

-

Sensitive Company Data: Are you strategizing your business success with AI chatbots? Well, it’s very easy to do that. After all, they are designed to help in ideation. Be it drafting an outreach email or debugging a code, it’s tempting to go for AI chatbots. However, feeding these data to the AI chatbots can lead to privacy issues and digital theft.

-

Medical Information: As stated above, AI chatbots are neither physicians nor psychologists. While some of these chatbots are made for medical purposes, most generative AI chatbots are not bound by regulations like HIPAA. This means your sensitive health information can be used against you.

-

Intellectual Property: Don’t share your business or creative ideas with AI chatbots. As these Gen AI chatbots mine your data and use it elsewhere, your idea might be displayed to others. This way, the idea can be replicated, incorporated, or shared without your knowledge.

-

Private Feelings: These AI chatbots can be seen as a safe space to share your emotional vulnerabilities. But this exposure comes with a cost. These bots aren’t empathetic. They just want you to stay longer so that they can learn from your data. This data storage can have privacy risks if a cybercriminal gets hold of it. Remember, you’re talking to a machine, not a trusted friend!

How to Start with Ethical and Controlled AI Chatbot Development?

Can I build my own AI chatbot? You must be wondering this.

Of course, you can! But, you have to do it ethically, efficiently, and most importantly with a privacy-first approach.

How are AI chatbots developed?

Business giants like Meta and others are continuously working to make AI chatbots that will not harm users. So, here we will provide you with some AI chatbot development strategies that you can follow.

1. Adopting a Secure Software Development Lifecycle

To make the AI chatbot secure, you have to embed security throughout the development process. A secure SDLC will make sure that your AI chatbot is protected from the onset of the project. This kind of development includes:

-

Identifying risks before the development actually starts

-

Incorporating design and development principles that prevent the common AI chatbot vulnerabilities

-

Carrying out continuous monitoring in real time to detect threats

2. Using Adversarial Testing

Conventional testing isn’t enough. The use of adversarial testing can help the chatbot’s capabilities to fight off sophisticated attacks. Adversarial AI testing prevents manipulation of your chatbot during a prompt injection attack or Jailbreaking.

It is not like the usual penetration testing. It is more about feeding misleading, harmful, or deceptive data to the chatbot model to measure its effectiveness against them.

3. Implementing Privacy-driven Chatbot Development Strategies

AI chatbots are bound to process sensitive customer and business data. So, privacy should be your top priority. For that, you can implement:

-

Data minimization can help your AI chatbot collect the necessary information only. ADF or automated data filtering and CDM (context-aware data masking) can prevent the storage of personally identifying data.

-

End-to-End Encryption can protect interactions and storage data from interception via certain protocols during transmission. Even if the data is intercepted, it will remain encrypted from malicious actors.

-

Transparent Models can help you deal with user privacy. With the help of XAI techniques, you can reduce the usage of black box scenarios. Black boxes are scenarios in which the internal working of the AI chatbots remains a mystery to their users.

4. Conducting Regular Security Audits

This is a very proactive approach to preventing attacks from malicious actors. You can engage with security experts who’ll provide an independent security assessment. This kind of security assessment helps you analyze risks associated with:

-

API security

-

Data leakage

-

Misconfigurations

-

Compliance risks

-

Authentication

-

Authorization

A very interesting approach when it comes to this is known as red teaming. It is a form of penetration testing. This involves an ethical hacking approach in which teams attack the AI chatbots to find their weaknesses. Red teaming mirrors real-world cyber-attack tactics such as Jailbreaking or a prompt injection attack.

5. Responsible Innovation

To avoid penalization by the FDA or other government authorities, you have to follow certain preventive and ethical practices.

-

Don’t advertise false AI chatbot capabilities. For instance, if your AI chatbot is a generic one, don’t claim it to be related to psychology or any other thing that it doesn’t specialize in.

-

You have to keep an eye on the ethical AI chatbot development guidelines provided by government authorities in your region. Then you align the development process that complies with these necessary regulations.

Developing Ethical AI Chatbots with Ethics at the Core

Innovation in AI chatbot development is necessary. But ethical innovation is the need of the hour! This will protect people from the dangers that lie in wait when using AI chatbots.

The APA envisions a future where these AI chatbots address the mental health crisis that plagues the world, not amplify it.

Even if you want to make an AI chatbot that deals with mental health, make sure the responses are grounded in research and expert opinions. Red teaming should also be carried out to eliminate other vulnerabilities.

Are you looking forward to developing an ethical AI chatbot that helps people while avoiding unintentional damage? You’ve to go for an AI development agency that takes ethical considerations seriously. Webskitters Technology Solutions is one such agency.

Want to explore more about how you can develop an optimal and ethical AI chatbot? Book a call with us and let us help you with a plan!

August 19, 2025

August 19, 2025